HPE ProLiant DL385 Gen11 GPU CTO Server with 8 × NVIDIA H200 NVL: High-Density AI Platform

- Product category: Servers

- Part number: HPE ProLiant DL385 Gen11

- Availability: In Stock

- Condition: New

- Product features: Ready to ship

- Minimum order: 1 unit

- Previous list price: $129,999.00

- Your price: $106,365.00 (you save $23,634.00)

Breathe easy. We accept returns.

Shipping: International shipments may be subject to customs procedures and additional fees.

Delivery: Please allow extra time if international delivery is subject to customs clearance.

Returns: 14-day returns. The seller covers the return shipping cost.

Free shipping. We accept NET 30 purchase orders. Get a decision in seconds, with no impact on your credit score.

For bulk orders of the HPE ProLiant DL385 Gen11, call us free of charge on WhatsApp at (+86) 151-0113-5020 or request a quote via live chat, and our sales manager will get back to you shortly.

Keywords

HPE ProLiant DL385 Gen11, GPU server, AMD EPYC 9534, NVIDIA H200 NVL, PCIe Gen5 expansion, high memory capacity, SAS SSD storage, redundant 1800–2200 W power supplies

Description

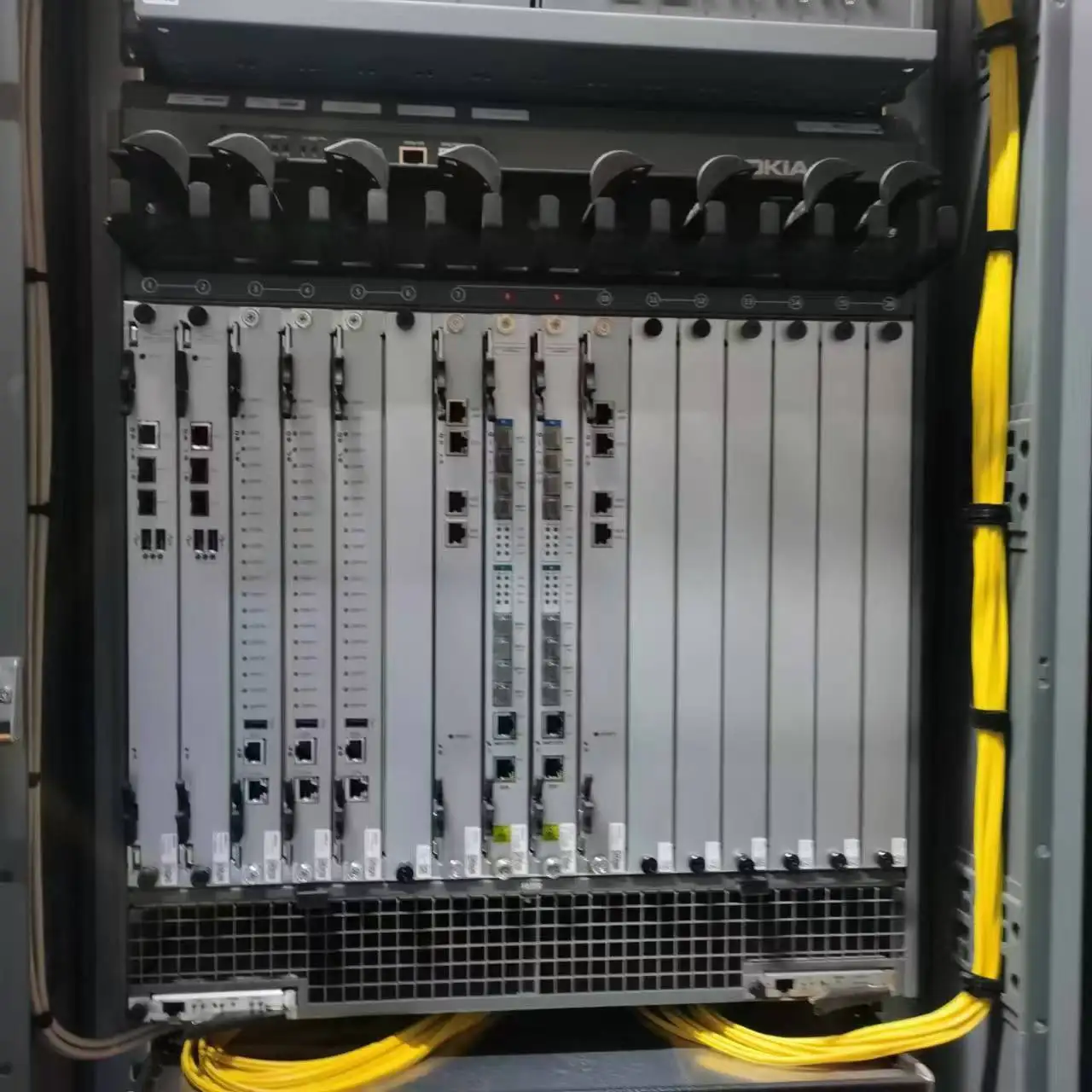

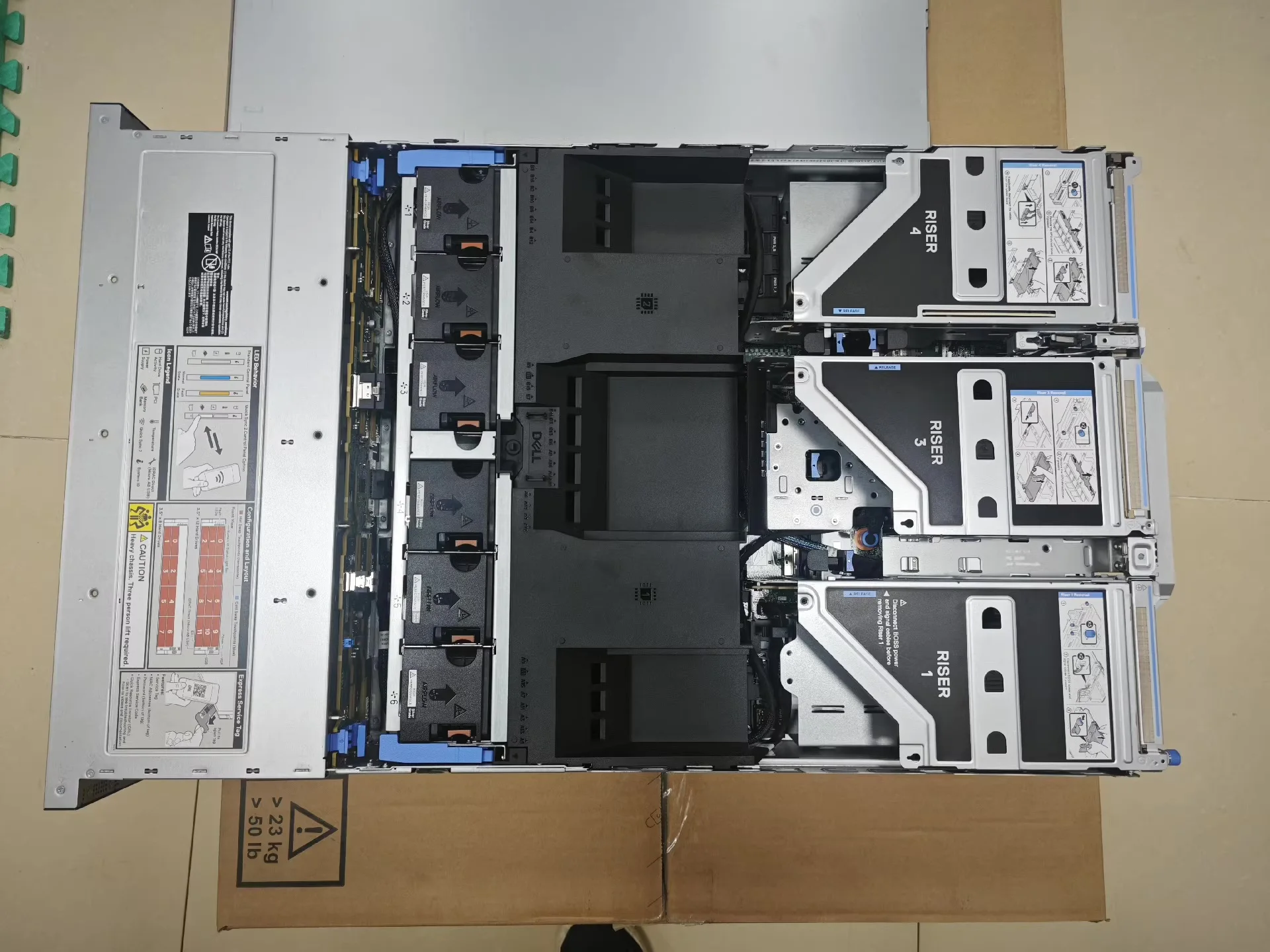

The **HPE ProLiant DL385 Gen11 GPU CTO Server** is a 2U dual-processor, accelerator-optimized server designed for next-generation AI, machine learning, and high-performance computing workloads.

This configuration features **2 × AMD EPYC 9534** (64 cores each) for a total of **128 CPU cores**, delivering massive parallel compute capacity.

The listing quotes support for up to **8 × NVIDIA H200 NVL 141 GB** accelerators for dense GPU workloads, while the configuration table itemizes 2 (part number S3U30C).

The server also includes enterprise-class networking, storage, and management components to support demanding deployment environments.

Memory is provided by **8 × 64 GB DDR5 ECC** modules, giving a total of **512 GB** of RAM for host-side staging of GPU transfers, data pipelines, and in-memory operations.

Storage consists of **8 × 3.84 TB SAS 12G read-intensive SSDs** for capacity (30.72 TB raw), plus **2 × 480 GB M.2 SSDs (RAID)** for redundant boot or cache.
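As a rough guide to what the eight-drive SAS pool yields, the sketch below estimates usable capacity for a few common RAID levels. The helper name `usable_tb` and the selection of RAID levels are illustrative assumptions, not part of the listing; real usable space also depends on controller settings and filesystem overhead.

```python
# Illustrative sketch: approximate usable capacity of a drive pool per RAID level.
# Drive count (8) and size (3.84 TB) come from the listing; the rest is assumed.

def usable_tb(drives: int, size_tb: float, level: str) -> float:
    """Return approximate usable capacity in decimal TB for a RAID level."""
    if level == "raid0":
        data_drives = drives          # striping only, no redundancy
    elif level == "raid5":
        data_drives = drives - 1      # one drive's worth of parity
    elif level == "raid6":
        data_drives = drives - 2      # two drives' worth of parity
    elif level == "raid10":
        data_drives = drives // 2     # mirrored pairs
    else:
        raise ValueError(f"unsupported level: {level}")
    return round(data_drives * size_tb, 2)

for level in ("raid0", "raid5", "raid6", "raid10"):
    print(f"{level}: {usable_tb(8, 3.84, level)} TB usable")
```

Higher redundancy trades capacity for fault tolerance, so the right level depends on whether the pool holds reproducible datasets or data that must survive drive failures.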

The system also includes a **Broadcom BCM57412** 10 GbE SFP+ NIC, 4 × 1 GbE BASE-T ports, and HPE’s **MR416i-o** storage controller for flexible I/O paths.

For power and reliability, this build uses **4 × 1800–2200 W Flex Slot Titanium hot-plug power supplies** in a redundant configuration, so the server can keep running if a supply fails.

To ensure GPU and component cooling, HPE’s GPU-capable airflow and thermal solutions (air baffle kits and heat sink kits) are part of the build.

The system is mounted using the HPE **ball bearing rails** kit for data center rack integration.

In summary, this configuration is crafted to serve as a high-density inference, training, or AI server platform that balances CPU, GPU, memory, and IO capabilities in a 2U form factor.

It is ideal for deployment in GPU-dense clusters or as a standalone compute node for demanding workloads.

Key Features

- Dual **AMD EPYC 9534** processors — 64 cores each, high throughput per socket

- Support for **8 × NVIDIA H200 NVL 141 GB** accelerators (Part Number: 900-21010-0040-000 / S3U30C)

- High GPU interconnect via **4 × NVIDIA 2-Way NVLink** bridges (Part Number: 900-53651-0000-000)

- 512 GB DDR5 ECC memory (8 × 64 GB modules) for balanced CPU–GPU feeding

- 8 × 3.84 TB SAS 12G read-intensive SSDs for capacity storage

- 2 × 480 GB M.2 SSDs in RAID for boot/metadata redundancy

- Broadcom BCM57412 10 GbE SFP+ NIC for high-speed networking

- 4 × 1 GbE Base-T ports for management or legacy connectivity

- HPE MR416i-o storage controller (OCP form factor, SPDM support) with 8 GB cache

- 4 × 1800–2200 W Flex Slot Titanium redundant power supplies

- HPE GPU airflow, heat sink, and air baffle kits for optimal thermal management

- Ball-bearing 2U rails for smooth rack installation and servicing

Configuration

| Quantity | Part Number | Description / Role |
|---|---|---|
| 1 | P54198-B21 | HPE ProLiant DL385 Gen11 GPU CTO Server (base chassis) |
| 2 | P53699-B21 | AMD EPYC 9534 64-core 2.45 GHz processors |
| 8 | P50312-B21 | 64 GB DDR5 ECC RDIMM modules |
| 8 | P40508-B21 | 3.84 TB SAS 12G read-intensive SSDs |
| 1 | P26259-B21 | Broadcom BCM57412 10 GbE SFP+ network adapter (PCIe x8) |
| 1 | P01367-B21 | HPE 96W Smart Storage Battery |
| 2 | P57884-B21 | Smart Storage Battery 2P for DL3X5 chassis |
| 1 | P69872-B21 | DL385 Gen11 GPU storage battery |
| 1 | P47781-B21 | HPE MR416i-o Gen11 OCP SPDM storage controller (8 GB cache) |
| 1 | P51181-B21 | Broadcom BCM5719 1 GbE 4-Port OCP3 Adapter |
| 2 | S3U30C | NVIDIA H200 NVL 141 GB accelerators |
| 4 | P44712-B21 | HPE 1800–2200 W Flex Slot Titanium power supplies |
| 2 | 455883-B21 | HP 10 Gb SR SFP+ transceivers |
| 1 | P69868-B21 | ProLiant DL3X5 Gen11 GPU module placeholder |
| 2 | P82106-B21 | 12-pin GPU power cable kit |
| 1 | P82523-B21 | GPU blank kit (slots unused) |
| 1 | P57886-B21 | Air baffle kit for GPU airflow |
| 1 | P52345-B21 | Ball bearing 2U rail kit |
| 1 | P58459-B21 | Performance heat sink kit (2U chassis) |
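To make the headline numbers in the table easy to check, the following sketch totals cores, host memory, raw storage, and GPU memory. The dictionary layout and key names are hypothetical; the quantities are copied from the listing, which quotes up to 8 GPUs in the description and 2 in the table, so both cases are shown.

```python
# Illustrative sketch: aggregate headline resources from the listed parts.
# Quantities mirror the listing; the data structure itself is an assumption.

config = {
    "cpu": {"qty": 2, "cores_each": 64},
    "dimm": {"qty": 8, "gb_each": 64},
    "ssd": {"qty": 8, "tb_each": 3.84},
    "gpu": {"qty_in_table": 2, "qty_max_quoted": 8, "hbm_gb_each": 141},
}

total_cores = config["cpu"]["qty"] * config["cpu"]["cores_each"]
total_ram_gb = config["dimm"]["qty"] * config["dimm"]["gb_each"]
raw_storage_tb = round(config["ssd"]["qty"] * config["ssd"]["tb_each"], 2)
hbm_gb_table = config["gpu"]["qty_in_table"] * config["gpu"]["hbm_gb_each"]
hbm_gb_max = config["gpu"]["qty_max_quoted"] * config["gpu"]["hbm_gb_each"]

print(f"CPU cores:        {total_cores}")
print(f"Host RAM:         {total_ram_gb} GB")
print(f"Raw SAS storage:  {raw_storage_tb} TB")
print(f"GPU memory (2x):  {hbm_gb_table} GB")
print(f"GPU memory (8x):  {hbm_gb_max} GB")
```

With eight accelerators the aggregate GPU memory would exceed the 512 GB of host RAM, which is worth keeping in mind when sizing host-side staging buffers.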

Compatibility

The **HPE ProLiant DL385 Gen11** platform supports up to 8 **single-wide GPUs** or 4 **double-wide GPUs** in its accelerator-optimized configuration, using rear riser slots designed for GPU expansion.

It supports AMD EPYC 9004/9005 series processors (e.g. EPYC 9534) with PCIe Gen5 and high memory bandwidth, enabling maximum throughput to accelerators and storage.
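For a sense of scale, the sketch below computes the theoretical one-direction bandwidth of a PCIe Gen5 link from the 32 GT/s per-lane signalling rate and 128b/130b encoding. It assumes each accelerator gets a full x16 link, which the listing does not state; the function name `pcie5_gb_per_s` is illustrative, and real throughput is lower once protocol overhead is included.

```python
# Illustrative sketch: theoretical PCIe Gen5 bandwidth per link.
# Assumption: each GPU is attached with a full x16 link.

GT_PER_S_PER_LANE = 32.0             # PCIe 5.0 raw signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130      # 128b/130b line encoding

def pcie5_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return GT_PER_S_PER_LANE * ENCODING_EFFICIENCY * lanes / 8

per_gpu = pcie5_gb_per_s(16)
lanes_for_8_gpus = 8 * 16            # lanes needed if all 8 GPUs run at x16

print(f"x16 link: about {per_gpu:.1f} GB/s per direction")
print(f"Lanes needed for 8 GPUs at x16: {lanes_for_8_gpus}")
```

Whether every slot in the GPU cage is actually wired at x16 should be confirmed against the vendor's riser documentation.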

Memory support is DDR5 ECC across multiple channels per socket, allowing large capacity and performance scaling.
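How much of that bandwidth is available depends on how many channels are populated. The sketch below estimates peak theoretical bandwidth per socket, taking DDR5-4800 and 12 channels per socket for the EPYC 9004 series, and assuming the eight DIMMs in this build are split evenly with one DIMM per channel. The even split and the memory speed are assumptions; the listing does not give the DIMM layout.

```python
# Illustrative sketch: peak theoretical DDR5 bandwidth per socket.
# Assumptions: DDR5-4800, 64-bit (8-byte) data path per channel,
# and 8 DIMMs split evenly across 2 sockets (4 populated channels each).

def ddr5_peak_gb_per_s(channels: int, mt_per_s: int = 4800,
                       bytes_per_transfer: int = 8) -> float:
    """Peak bandwidth in GB/s for the given number of populated channels."""
    return channels * mt_per_s * bytes_per_transfer / 1000

as_configured = ddr5_peak_gb_per_s(4)     # 4 DIMMs per socket (assumed)
fully_populated = ddr5_peak_gb_per_s(12)  # all 12 channels per socket

print(f"As configured (assumed 4 channels): {as_configured} GB/s per socket")
print(f"Fully populated (12 channels):      {fully_populated} GB/s per socket")
```

Under these assumptions, populating more channels would raise peak memory bandwidth, which may matter for data-loading stages that run on the CPUs.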

For cooling and layout, GPUs must be installed in the designated GPU cages and are not supported in general rear PCIe slots in the GPU-CTO variant.

The base server also supports boot storage via M.2 or boot-optimized storage devices, HPE Smart Storage batteries for protecting the storage controller cache, and OCP / PCIe NICs for networking flexibility.

Usage Scenarios

This configuration is exceptional for **large-scale model training and inference**, where multiple GPUs process massive workloads in parallel. The strong CPU base enables efficient data feeding, orchestration, and preprocessing.

It fits **multi-tenant AI clusters**, where each node must provide GPU slicing or partitioning for varied workloads. The integrated networking and storage support data exchange across nodes.

In **HPC / simulation tasks**, portions of the workload may run on CPUs while heavy matrix or compute kernels use GPU acceleration. The design supports such hybrid CPU+GPU pipelines.

It’s well suited as a **deep learning inference appliance** in production environments, where deterministic performance, redundancy, and fault tolerance are essential. The redundant power and thermal management features help maintain continuous operation.

Use in **edge AI clusters**, **data center AI farms**, or **rendering farms** is also applicable—especially in scenarios demanding both high compute density and manageable rack space constraints.

Frequently Asked Questions (FAQs)

1. How many GPUs can this DL385 Gen11 server support?

The DL385 Gen11 GPU CTO variant supports up to **8 single-wide GPUs** or **4 double-wide GPUs**, provided they are placed in the specified GPU cage slots.

2. Why choose EPYC 9534 for a GPU server?

EPYC 9534 offers a high core count and high memory bandwidth, which helps feed multiple GPUs concurrently. Its PCIe Gen5 support gives each GPU a high-bandwidth link to the host.

3. What is the role of the MR416i-o controller in this build?

The **HPE MR416i-o** is an OCP-form-factor storage controller with SPDM support, used to manage attached storage or caching layers. In this configuration, it can manage the SAS SSD pool and provide RAID, caching, or pass-through modes to optimize I/O performance.

4. Are the installed power supplies adequate for full GPU load?

Yes, the 4 × 1800–2200 W Flex Slot Titanium PSUs in this build are designed to supply sufficient power even under full GPU, CPU, and storage load. They operate redundantly, meaning the server continues functioning even if one unit fails.

5. Can I add or upgrade GPUs later?

Yes, provided the GPU cage has free slots and there is adequate cooling and power headroom. Make sure you maintain correct GPU spacing, keep the airflow baffles in place, and have the required GPU power cables.
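The power and upgrade questions above can be sanity-checked with a rough budget. The sketch below is a minimal estimate, not a sizing tool: the per-GPU figure (600 W), per-CPU figure (280 W), and the 500 W allowance for drives, fans, memory, and NICs are all assumptions, and the function names are illustrative. The two PSU ratings correspond to the 1800 W and 2200 W ends of the listed range.

```python
# Illustrative power-budget sketch. All wattages are assumptions,
# not measured figures for this server.

GPU_W = 600      # assumed maximum board power per GPU
CPU_W = 280      # assumed TDP per CPU
OTHER_W = 500    # assumed allowance for drives, fans, memory, NICs

def estimated_load_w(gpus: int, cpus: int = 2) -> int:
    """Estimated worst-case system load in watts."""
    return gpus * GPU_W + cpus * CPU_W + OTHER_W

def capacity_w(psus: int, rating_w: int, failed: int = 0) -> int:
    """Total capacity of the power supplies still running."""
    return (psus - failed) * rating_w

def survives(gpus: int, rating_w: int, failed: int, psus: int = 4) -> bool:
    """True if the remaining supplies cover the estimated load."""
    return capacity_w(psus, rating_w, failed) >= estimated_load_w(gpus)

for gpus in (2, 8):
    for rating in (1800, 2200):
        for failed in (0, 1, 2):
            print(f"{gpus} GPUs, {rating} W PSUs, {failed} failed: "
                  f"load {estimated_load_w(gpus)} W, "
                  f"capacity {capacity_w(4, rating, failed)} W, "
                  f"ok={survives(gpus, rating, failed)}")
```

Under these assumed figures, an eight-GPU load fits within three 2200 W supplies but not three 1800 W supplies, so the input voltage and redundancy mode affect how much headroom remains. The vendor's power sizing tool remains the authoritative check before adding GPUs.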
